What DBT-GA4 alternatives are there for working with the GA4 export to BigQuery?

Extracting GA4 data into a data warehouse lets you work with the data however you want transcending the limits of the interface and combine your analytics data with other data sources for use in dashboards, machine learning, and in other products.

Exporting the data, however you do it, requires investments in time and money and you will almost certainly need some new skills in your organization to make use of the data if you are doing this for the first time.

The dbt-GA4 package is one of many ways that you can get value from your GA4 data outside of the GA4 interface. It has a number of advantages and drawbacks which means that it is not right for every situation.

There are three ways that you can work with this data:

- Use the native GA4 export to BigQuery

- Use the API export to any warehouse

- Use a hybrid solution to any warehouse

Use the native GA4 export to BigQuery

This group of options uses GA4’s native export to BigQuery.

The advantages of the native export are as follows:

- Flexibility: you can do pretty much anything that you want with your data

- Cost: the export is free and BigQuery has a generous free-tier on top of the current low cost of storage and processing that is common to all data warehouses

- Accuracy: the raw events undergo only light processing with no algorithms being run to reduce costs at the expense of accuracy Data pipeline applications that forward the native export to another cloud warehouse Processing data brings both advantages and disadvantages. You should weight these against each other when comparing options for working with your data.

The disadvantages of the native export are as follows:

- Labor: you will need to replicate some processing that Google does in the interface and in the API export and this takes time

- Expertise: in order to use the export, you need someone who knows about the raw export data schema and BigQuery SQL

- Vendor lock-in: the native export requires BigQuery; if your organization does not use Google Cloud, then you’ll need add Google to the cloud vendors that you manage

- No historical data: the native export only starts recording data from the first day that you set it up

You have several options that build on the native export:

- Data transformation applications

- dbt with the dbt-GA4 package

- Google Dataform

- Analytics applications that use the native export as a data source

- Analytics Canvas

Each of these options shares the advantages of the category, some have their own unique advantages, but they all mitigate the disadvantages in different ways.

DBT with the dbt-GA4 package

The dbt-GA4 package distinguishes itself from the other options in this category by being a widely-used, open-source platform and by reducing the amount of time needed to build out report-ready tables.

Because it is open-source, more people contribute to the package which means that there are more eyes on the code who catch more edge-case issues.

Additionally, dbt is not restricted to just GA4 data, like many of the other options here. Its open-source nature means that there are a host of other packages developed by the community that should save you time when working with other data sources.

If your data warehouse plans stretch beyond Google Analytics data, then this is a major advantage.

Because dbt is so pervasive, many ETL and ELT tools use dbt for the transformation portion of their platforms, there is more talent available. As a warehouse-agnostic tool, it also makes it easier for people who are not familiar with the BigQuery flavor of SQL to work with BigQuery data with a minimal learning curve.

The amount of time saved by using dbt-GA4 over developing your own transformations is substantial: it probably saves about 40+ hours between development time, learning the intricacies of the data source, and fixing some of the more difficult to spot data quality issues in the raw data.

Google Dataform

Google Dataform is the Google Cloud-native data transformation platform. Its main advantage is that it is a native platform but it lacks the package ecosystem of dbt.

There are a number of positive consequences that result from being a native platform:

- Interface lives in BigQuery next to your data

- Integration to Google’s AI coding tools

While the lack of a well-developed package ecosystem hurts Dataform, interface streamlining and AI should improve productivity.

AI, in particular, has a lot of potential where it understands the field formats in the source data. From there, the AI only really needs to understand data modeling techniques, which follow some well-defined patterns that AI should handle really well, and what people need to use the data for.

Analytics Canvas

There are several analytics products built on top of the native GA4 export, but Analytics Canvas is the overwhelming choice among data professionals with a flexible and mature product.

It should be said that Analytics Canvas can be configured to work with either the API export or the raw BigQuery export.

Analytics Canvas also keeps adding integrations to other data sources so it is more than just an analytics tool for working with GA4 data. It is obviously not as flexible as a data warehouse that lets you combine compatible ETL/ELT tools to pull data from anywhere that has an API but it is easier to work with.

Use the API export to any warehouse

Where data is concerned, you usually work with APIs by using an ETL or ELT platform to pull the data from the API to your warehouse although direct connections between applications are more common in general computing. The best way to get data from most platforms is usually via an API because the data is accurate and the API can be used by any application that wants to pull the data.

This is not the case with GA4 Data API.

In the GA4 Reports section of the interface, Google obfuscates data when data volumes are low and it groups data that has high cardinality, which is when there are a lot of possible values for dimensions.

At low data volumes, Google is protecting the identities of individuals. Usually, you will see rows of data with the same minimum numbers (often 7) for basic counting metrics like users, sessions, and page views and no values below that when data is subject to thresholding.

With high-cardinality dimensions, Google is limiting the costs of offering analytics by combining rows which means that Google spends less on scanning your data to deliver reports.

Cardinality is the biggest problem most people have with GA4 and the API export that works off of the processed tables because it can affect up to 70 percent of your data grouping dimensions under an “(other)” label. This can lead to losing important information on things like campaign performance that can cause your analytics data to be nearly useless.

Another problem with the API export is that you need to define the dimensions and metrics that you need and there are limits on what you can request.

This is fine for stable requirements, but it makes it hard to enable exploratory analytics with API export data as you need to anticipate every combination of dimensions that someone might want to explore when setting up the integration. Exploratory analytics is much better served by the raw data.

The main advantages of the API export are that you do not need to set up infrastructure on BigQuery if you are using a different warehouse and any ETL or ELT tool that you are using probably already has an integration that uses the GA4 Data API.

Use a hybrid solution to any warehouse

Hybrid solutions work off of the native export to BigQuery but then, usually via an ETL/ELT platform, forward that data to your main warehouse.

The only reason to use a hybrid solution over any of the previous options is that your main warehouse is on something other than Google Cloud.

The big disadvantage of a hybrid solution is that it means you have to manage a BigQuery project in Google Cloud in addition to your main warehouse. You will also have to account for BigQuery-specific data structures that do not automatically translate to other warehouses.

The main advantages are that hybrid solutions let you centralize your data, simplifying access to data consumers, and let you work off of the native GA4 BigQuery export which does not have the problems that API data does.

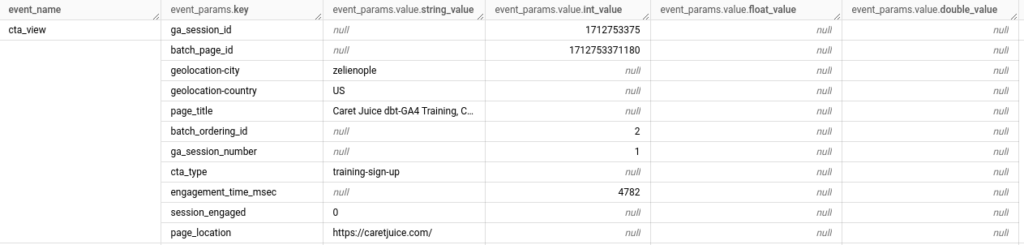

There is one main obstacle when implementing a hybrid solution and that is Google’s liberal use of the BigQuery-specific record and repeated record data structures. You can see repeated records in the below screenshot.

The event_name column has a single value and there many columns not shown in the screenshot like event_timestamp and user_pseudo_id that have one value per row.

However, the event_params has several sub-columns, like key, value.string_value, and value.int_value, and it has several sub-rows. It is like a table within a table.

In other warehouses, this would be represented as a separate event_params table that would have an event_key column that you would use to join to your main events table as well as columns corresponding to key, string_value, int_value etc… from the screenshot.

There are two basic hybrid approaches that pull data from the native GA4 BigQuery export to your target warehouse:

- Use a managed service to fix warehouse compatibility and forward the data

- Fivetran

- Snowflake

- Fix the warehouse compatibility issues in BigQuery and use an ETL/ELT platform to forward the data

- Implement a minimal fix for the compatibility issues in BigQuery, forward the data and do most of your modeling in your target warehouse

- Use the dbt-GA4 package and forward the data marts to your target warehouse

Use a managed service to fix warehouse compatibility and forward the data

Because of severe limitation on the GA4 API a number of applications use the native BigQuery export and forward the raw data to other cloud analytics warehouses, like RedShift, Snowflake, and Databricks, in a format that is compatible with those warehouses.

Two examples of this are Fivetran, an ELT tool that also has an API integration to GA4, and Snowflake, a cloud warehouse that builds on top of AWS, Google Cloud Platform, or Microsoft Azure.

These applications use BigQuery as an intermediary source between GA4 and the destination warehouse but they abstract away the BigQuery infrastructure and handle the data transfer.

This form of integration should minimize the management needs of the GA4 export to BigQuery when you use another cloud warehouse as your primary warehouse while letting you take advantage of the more flexible and robust data that you get from the native GA4 export to BigQuery.

Because of severe limitation on the GA4 API a number of applications use the native BigQuery export and forward the raw data to other cloud analytics warehouses, like RedShift, Snowflake, and Databricks, in a format that is compatible with those warehouses.

Fix the warehouse compatibility issues in BigQuery and use an ETL/ELT platform to forward the data

Fixing warehouse compatibility issues in BigQuery will require that you do some modeling in BigQuery which means that you will need to actively manage BigQuery in addition to actively managing your main warehouse.

Implement a minimal fix for the compatibility issues in BigQuery, forward the data and do most of your modeling in your target warehouse

If you implement a minimal fix for compatibility issues, you will end up exporting the following tables from the source events_* or events_intraday_*tables in the native GA4 export to BigQuery:

- events

- event_params

- user_properties

- items

Each repeated record, event_params, user_properties, and items, in the source data will get its own table while ordinary, non-repeated records, will need to be converted to columns in the events table.

You will need to generate an event_key to tie data between all tables but then you will be ready to export your data to your main warehouse.

The big advantage of this structure is that, once it is set up, you will not need to touch BigQuery again. Even when someone creates new events or adds new event parameters to existing events in GA4, this data structure will ready the data for your target warehouse without modification. You will still need to create new models or modify existing ones in your main warehouse when changes are made, but BigQuery is covered by this data schema.

Use the dBT-GA4 package and forward the data marts to your target warehouse

You can also use the dbt-GA4 package to do the modeling work and then forward your flattened, report-ready data marts to your target warehouse.

The two main advantages of this approach are that it should speed up the modeling process while improving data quality.

Using the dbt-GA4 package should get you report-ready data marts faster than modeling the minimal, compatibility export as described above or working with a managed solution, which likely give you the same base tables as the compatibility export. This is especially true if you already use dbt for modeling in your main warehouse as your dbt knowledge should largely translate to the dbt-GA4 package and BigQuery.

Having been deployed on thousands of sites, the package also accounts for quirks in the data that would otherwise require deep knowledge of GA4 and the export to BigQuery.

For example, the user_pseudo_id field is not actually a unique ID. The dbt-GA4 package accounts for this without you needing to know.

Another example is how the package fixes inconsistencies with how GA4 handles paid traffic from Google Ads. Google presumes that GA4 and Google Ads use the native integration between the two products for attribution. However, the export to BigQuery does not include attribution based on this integration resulting in Google Ads traffic being labeled as organic search traffic. The package fixes this for you.

If you were to work with this data without fairly strong knowledge of the source data, you will likely miss issues like these that will degrade data quality in various ways resulting in bad data and upset stakeholders.

The big drawback of this approach is that you will need to update your BigQuery dbt project every time someone adds a new event or event parameter in GA4. This may then require changes in your ETL/ELT platform and changes in your target warehouse.

This cascading chain of changes needs to be managed and the inter-related dependencies may require the addition of an orchestration tool which amounts to more complexity in your data pipelines.

GA4 Data Exports

GA4 data is more complex than most other data sources.

The native export is fundamentally event stream data. Event stream data is common in modern SaaS applications thanks to its flexibility, which is also why GA4 uses this format, but the API exports from those applications are usually of more processed data downstream from a source event stream so data engineers do not see event stream data that often even though it is a common application data structure.

The API export suffers from limitations imposed primarily to reduce costs which is fair for a free product and these limitations are greatly reduced on the paid version of GA4.

Branching off from these two basic data export formats are a variety of options that are suitable for different organizations with different levels of expertise in analytics, SQL, data engineering, and more.

There is no one correct solution for all organizations which is why it is important to understand your options before committing to one solution or another.